A very common request (which often becomes a demand) made by clients is a performance guarantee, things like:

- I want all screens/pages to have a maximum response time of 3 seconds.

- I want all queries to have a maximum response time of 3 seconds.

- I want the system to run on my server (when we're lucky, they tell us the server's specifications).

What to do in such a case? When I had just graduated (more than a decade ago) my response used to be: "I need more data", "With the information I have, I can't guarantee that performance", "I don't even know what the algorithm for the indirect sales commission calculation process consists of, how am I going to guarantee that the screen where I see the result responds in 3 seconds???"

It almost goes without saying that my attitude was the wrong attitude...

My questions seemed logical to me. Being asked to guarantee a page response time of 3 seconds was like being told "you're going to transport a box of indeterminate weight, across an unknown distance, using an unknown mechanism, and you have to guarantee that you can do it in 3 seconds".

Of course, my response was: "If I don't know the weight, the distance, or the mechanism, I can't guarantee the time..."

And the inevitable response from my bosses, "Don't tell me why not, tell me what would have to be true to make it happen", was irritating to my ears.

But they were right. If you're asked for a guaranteed transport time, and you don't know the weight, the distance, or the mechanism to use, you are not expected to say "I lack that data". You are expected to say under what conditions you could do it in the specified time (if the client later cannot provide those conditions, the failure is not yours).

Of course, this doesn't mean you shouldn't ask. It's always best to ask. Paradoxically, though, you should ask without worrying too much about whether you get an answer. Some clients are interested in you building the right thing, and they will try to answer your questions by giving you the parameters you require. For other clients the "right" thing doesn't matter; the only thing that matters to them is that it's done "correctly" (that it's well done, even if it's not useful to them). This second type of client will often be irritated by your questions, and might even answer "you're the expert, I don't know about this, you tell me what you need to do it in the time I require"...

There will be cases where your client is lucky, and the right thing and the thing done correctly coincide. Always try to do the right thing, but if your recommendations are ignored, don't get irritated: switch modes. Don't think that your client is "stupid" or "bad" for demanding the "what needs to be true". Ultimately, they are right, and you are supposed to be the expert: tell them "what needs to be true", and let them decide whether or not they have the budget.

Thus, all the missing parameters must be assumed. Let's take the most extreme case: they ask for a response time of 3 seconds and give you no other clues, and the system has various requirements (such as "display commissions for indirect sales"). You don't know how many sales they have, but you know it's a multinational corporation, so they are certainly not "a few."

What to do? Let's go back to the example of transporting a package:

Weight: Unknown

Distance: Unknown

Mechanism: Unknown

Transport time: 1 hour.

What would you do?

Well, you could say, for example: the basket of my bicycle holds 1 liter of water, and in one hour on my bicycle I cover, let's say, 40 kilometers. Then:

Weight: 1 kilo

Dimensions: maximum 15 cm square (the volume that fits in the basket of the bicycle)

Distance: maximum 40 km

Mechanism: Bicycle

Transport time: 1 hour.
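Written down as data, the assumption above could look like the following minimal Python sketch. The class name `TransportAssumptions`, its field names, and the reading of "15 cm square" as a maximum side length are illustrative assumptions, not part of any real system. The only point is that a clearly stated assumption can be checked against a concrete request.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TransportAssumptions:
    """Conditions under which the delivery time is guaranteed (hypothetical names)."""
    max_weight_kg: float = 1.0
    max_side_cm: float = 15.0      # assumed reading of "15 cm square"
    max_distance_km: float = 40.0
    mechanism: str = "bicycle"
    guaranteed_hours: float = 1.0

    def violations(self, weight_kg, side_cm, distance_km):
        """Return the reasons why the guarantee does not cover this request."""
        problems = []
        if weight_kg > self.max_weight_kg:
            problems.append(f"weight {weight_kg} kg exceeds {self.max_weight_kg} kg")
        if side_cm > self.max_side_cm:
            problems.append(f"side {side_cm} cm exceeds {self.max_side_cm} cm")
        if distance_km > self.max_distance_km:
            problems.append(f"distance {distance_km} km exceeds {self.max_distance_km} km")
        return problems


assumptions = TransportAssumptions()

# A small parcel within the stated limits: the guarantee applies.
print(assumptions.violations(weight_kg=0.5, side_cm=10, distance_km=12))  # []

# Half a ton of bricks, 100 km away (the 100 cm side is a made-up figure).
for problem in assumptions.violations(weight_kg=500, side_cm=100, distance_km=100):
    print(problem)
```

An empty list means the request falls inside the published assumptions. Anything else is a list of conditions to renegotiate with the client.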

Now, the big mistake, and the reason assumptions like these are considered the mother of all evils, is that the person who makes them keeps them to themselves until the day the client wants to send a package, and it turns out that half a ton of bricks needs to be sent 100 km away in a maximum of 2 hours... and there's no way in the universe to do that in the basket of your bike...

And then comes the typical sermon: "don't assume!", "ask!" (which becomes particularly annoying because you clearly remember asking the client, and they never gave you a clear answer).

Was the error in the assumption? NO.

The error was that you did not make your assumption PUBLIC. You didn't discuss it verbally with the client, you didn't send it by email, and you didn't try to get them to sign a document accepting it. Now, of course, some might think: "I would have to list all the possibilities": what if the package is 100 kg, what if it's 10 square meters, what if I have to deliver it in 15 minutes? That's the wrong approach. The correct approach is to give one example, but a very clearly defined one.

Of course, some will say, there will be something you overlook: what if the user asks to send 1 kilo of uranium? It meets all the criteria, but the radioactivity will kill you! There's no way to think of everything.

Indeed, there isn't. But that's no excuse not to try to define things as precisely as possible. If you strive to define your assumptions as best you can, they will gradually become more complete, and after a few years, experience will allow you to include enough factors so that the possibility of being asked to transport something that violates your assumptions and causes you harm is very remote. If at the beginning of your career as a developer, 9 out of 10 assumptions lead you into problematic situations, and 10 years later, only 5 out of 10 do, that is a success.

Returning to the scenario that interests us as developers, suppose you are asked: “I want all queries to have a maximum response time of 3 seconds”.

Establish assumptions:

- Maximum number of records per query.

- Maximum data weight of the query in Megabytes.

- Network speed (Mbits/s).

- Hardware characteristics of all servers and client workstations involved.

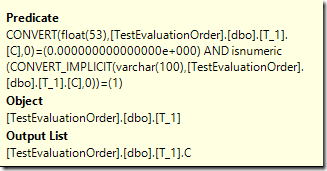

- Type of operation (if it's SQL: presence of indexes, maximum number of records in the tables, maximum number of joins, version of the pseudo-RDBMS, etc.).
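A few of the assumptions in this list can already be turned into arithmetic. The sketch below is a rough illustration in Python, with hypothetical names (`QueryAssumptions`, `transfer_seconds`, `remaining_budget_seconds`). It only estimates how much of the 3-second budget is spent moving the data across the network, and it deliberately ignores latency, protocol overhead, and server processing time.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QueryAssumptions:
    """Published conditions for a response-time guarantee (hypothetical names)."""
    max_records: int
    max_payload_mb: float        # maximum data weight of the query, in megabytes
    network_mbit_per_s: float    # assumed sustained network speed
    response_budget_s: float = 3.0

    def transfer_seconds(self) -> float:
        """Time to move the largest allowed payload: 1 megabyte = 8 megabits."""
        return self.max_payload_mb * 8 / self.network_mbit_per_s

    def remaining_budget_seconds(self) -> float:
        """What is left for the database and the application after the transfer."""
        return self.response_budget_s - self.transfer_seconds()


fast = QueryAssumptions(max_records=1_000, max_payload_mb=2.0, network_mbit_per_s=100.0)
slow = QueryAssumptions(max_records=1_000, max_payload_mb=5.0, network_mbit_per_s=10.0)

for name, case in (("fast", fast), ("slow", slow)):
    print(f"{name}: transfer {case.transfer_seconds():.2f} s, "
          f"remaining {case.remaining_budget_seconds():.2f} s")
```

With 2 MB over 100 Mbit/s the transfer takes 0.16 s and leaves 2.84 s for everything else. With 5 MB over 10 Mbit/s the transfer alone takes 4 s, so the 3-second guarantee cannot hold under those conditions no matter how fast the query runs.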

In civil engineering, if an engineer wants to know the strength of a steel bar 10 cm thick and 10 meters long, they can look it up in a catalog...

Isn’t it time we built that kind of knowledge for ourselves?

As much as I've looked, I haven't found tables with the above data, where someone has tested certain "typical" hardware/software configurations and basic algorithms. Something like:

If you perform a join between 2 tables with such and such columns in SQL Server 2008 R2, on an i5 server with 8 GB of RAM and a disk of certain characteristics, on a network of... and so on for the other combinations (after all, we are programmers, we create algorithms, so let's make one that generates X combinations).
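As a very small sketch of what generating such combinations could look like, the Python example below times one join for every combination of table size and index presence. It uses an in-memory SQLite database only because it ships with Python. It is a stand-in, not SQL Server, and the table names, column names, and the functions `time_join` and `run_catalog` are all made up for illustration. The timings it prints depend entirely on the machine it runs on.

```python
import itertools
import sqlite3
import time


def time_join(rows_per_table: int, indexed: bool) -> float:
    """Build two tables in an in-memory SQLite database and time one join."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sellers (id INTEGER, name TEXT)")
    conn.execute("CREATE TABLE sales (id INTEGER, seller_id INTEGER, amount REAL)")
    conn.executemany(
        "INSERT INTO sellers VALUES (?, ?)",
        ((i, f"seller-{i}") for i in range(rows_per_table)),
    )
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        ((i, i % rows_per_table, 10.0) for i in range(rows_per_table)),
    )
    if indexed:
        conn.execute("CREATE INDEX idx_sellers_id ON sellers (id)")

    start = time.perf_counter()
    conn.execute(
        "SELECT s.name, SUM(x.amount) FROM sales x "
        "JOIN sellers s ON s.id = x.seller_id GROUP BY s.name"
    ).fetchall()
    elapsed = time.perf_counter() - start
    conn.close()
    return elapsed


def run_catalog(row_counts, index_options):
    """Measure every combination of the given parameters."""
    return [
        {"rows": rows, "indexed": indexed, "seconds": time_join(rows, indexed)}
        for rows, indexed in itertools.product(row_counts, index_options)
    ]


catalog = run_catalog(row_counts=[1_000, 5_000], index_options=[False, True])
for entry in catalog:
    print(entry)
```

A real catalog would add more dimensions (hardware, network, database version, number of joins) and repeat each measurement several times, but the shape stays the same: a list of parameters, their Cartesian product, and one measured number per combination.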

Will it be a benchmark that holds in every case? Of course not, but that's not the goal. The goal is to be able to offer a reasonable assumption, and to give your client the confidence that if the conditions you set are met, you can guarantee a result.

Then, if something else happens along the way... well, it's not your fault, you couldn't control it. And the number of clients who will understand that you didn't meet the goal because they (or the circumstances) violated your assumptions is (at least in my experience) much greater than the number who would understand if you told them "why not" from the beginning instead of "what would have to be true".

Remember, assumptions are not bad. If you ask a civil engineer to build your house, you don't expect to answer their questions about the strength of materials. That's not your job. The job of the consultant (whether a software developer or a civil engineer) is to find the conditions under which "it can be done," and in software, fortunately... or unfortunately, almost everything can be done, if you have the right assumptions.

Originally published in Javamexico